The Robotics and Interactive Systems Engineering (RISE) Laboratory focuses on the design and development of Human-Machine Collaborative Systems (HMCS) and the study of human-machine haptics. We design and develop robotic systems that amplify and/or assist human physical capabilities in tasks that require learned skills, judgement, and precision. These systems combine human judgement and experience with physical and sensory augmentation, using advanced display and robotic devices, to improve overall task performance. In haptic research, we are interested in understanding how humans interact with the physical (or virtual) world through the sense of touch. Our research explores aspects of human dynamics and psychophysics, such as force control and perception, that affect human-machine interaction and are essential to the advancement of future human-machine systems. Applications of the research include medical and biomedical robotics, haptic (force-feedback) interfaces, simulation and training devices, and computer-aided manufacturing.

Recent Projects:

Immersive virtual reality with CAVE VR system

The establishment of the CAVE VR lab was supported by an NSF MRI grant (Award # 1626655) to acquire a virtual environment (VisCube M4 CAVE system) that facilitates interdisciplinary research in human-machine interaction. The room-size system includes a four-wall projection system, an eight-camera real-time motion capture system, and a control software suite that allows customized software development and additional hardware integration. The research activities enabled by the CAVE system span a wide range of applications in transportation systems, rehabilitation, sports training, advanced manufacturing, and the overall design of human-machine interfaces. For current projects on the CAVE system, please visit the BeachCAVE website.

Vibrotactile device for enhancement of residual limb proprioception in lower-limb amputees

The research focuses on the development of a vibrotactile feedback device and rehabilitation training methods to build new motor learning strategies in persons with lower-limb amputation. Individuals using prostheses have limited proprioception in the amputated limb and must rely on friction and pressure sensations felt at the skin-socket interface to perceive the state of their prosthesis. For lower-limb prosthetic users, this loss of proprioception can affect stability and contribute to falls. As a training aid, the vibrotactile device, which can be attached to any prosthesis, will generate vibration on the prosthesis that can be felt at the skin-socket interface to simulate collisions of the prosthesis with external objects.

Clinical Collaborators: Dr. I-Hung Khoo (Electrical Engineering, CSULB), Dana Craig (Gait and Motion Analysis Lab, VA Hospital, Long Beach) and Brian Ruhe, Ph.D., CP (Assistant Professor, Orthotics and Prosthetics Program, CSU-Dominguez Hills), Dr. Will Wu (Kinesiology, CSULB).

Funding Source: California State University Program for Education and Research in Biotechnology (CSUPERB) Joint Venture Grant

User Input Device with Haptic Feedback for NASA NextGen Flight Controller

(Cockpit display image obtained from NASA Ames Human Factors Research and Technology Division)

This project involves the development of a user input device to be used with the 3D Volumetric Cockpit Situation Display (CSD) developed by NASA as part of the Cockpit Display of Traffic Information (CDTI) program. Because the CSD is intended for use in the cockpit, its advanced features, such as 2D-to-3D manipulation and real-time path planning, limit the usefulness of traditional input devices such as a mouse and a keyboard. In addition to the device development, we are interested in incorporating other user feedback modalities, including audio and haptic (force and/or tactile) feedback, to enhance the user experience and performance outcome. The research will explore new design criteria for the next generation of human input devices that combine 3D information display with multimodal feedback for real-time flight planning. Currently, the Novint Falcon is used as a testbed for new prototype development and evaluation of haptic feedback modalities.

Students: Jose Robles (MS-ComE), Eric Park (MS-CS), Paul Sim (BS-CS)

Collaborators: NASA URC - Center for Human factors in Advanced Aeronautics and Technologies (CHAAT) with PIs, Dr. Thomas Strybel and Dr. Kim Vu (Department of Psychology, CSULB)

CHAAT Website: www.csulbchaat.org

Funding Source: NASA Group 5 URC

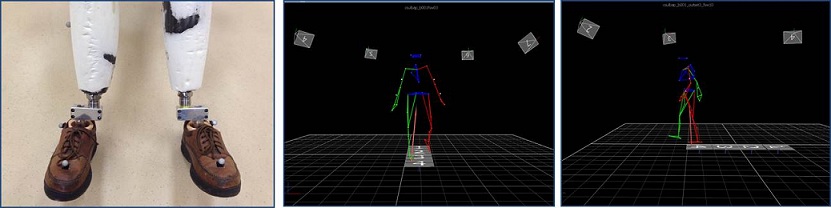

Gait Analysis of Transtibial Unilateral and Bilateral Amputees

The project involves analysis of gait characteristics and evaluation of the effect of alignment variables, including prosthetic foot size and outward foot rotation, on the rehabilitation outcome of individuals with bilateral below-knee (BK) amputation. The project aims to quantify the key differences (or similarities) in the kinematic and kinetic characteristics of walking gait between bilateral and unilateral BK amputees. The research findings can lead to improvements in bilateral amputee gait patterns and can provide field guidance and possible robotic assistance for optimization of prosthetic alignment in bilateral amputees. The gait data will be collected from unilateral and bilateral BK amputees and persons with natural limbs, using a VICON capture system with built-in force plates, at the Gait Lab at the Long Beach Veterans Affairs Medical Center. A set of custom MATLAB programs is being developed to convert the raw motion and ground reaction force data into usable parameters to verify our hypotheses.
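As a rough illustration of what converting raw ground reaction force data into usable parameters can look like, the sketch below extracts stance intervals and stance durations from a vertical force trace. It is written in Python rather than MATLAB, it is not the lab's actual processing code, and the function names, the 20 N contact threshold, and the sample rate are all assumptions chosen for the example.

```python
def stance_intervals(vertical_grf_n, threshold_n=20.0):
    """Return (start, end) sample index pairs where the foot loads the plate.

    `end` is exclusive. The 20 N threshold is an illustrative assumption,
    not a value taken from the project.
    """
    intervals = []
    start = None
    for i, force in enumerate(vertical_grf_n):
        if force > threshold_n and start is None:
            start = i  # foot strike: force rises above the threshold
        elif force <= threshold_n and start is not None:
            intervals.append((start, i))  # toe-off: force falls back
            start = None
    if start is not None:
        # Recording ended while the foot was still on the plate.
        intervals.append((start, len(vertical_grf_n)))
    return intervals


def stance_durations_s(intervals, sample_rate_hz):
    """Convert sample-index intervals into stance durations in seconds."""
    return [(end - start) / sample_rate_hz for start, end in intervals]


if __name__ == "__main__":
    # Synthetic vertical force samples (newtons), two short foot contacts.
    grf = [0, 5, 100, 400, 300, 10, 0, 50, 600, 0]
    contacts = stance_intervals(grf)
    print(contacts)
    print(stance_durations_s(contacts, sample_rate_hz=100.0))
```

Stance duration is only one candidate parameter; comparing it between the left and right limb is one simple way to express gait asymmetry.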

Clinical Collaborators: Dana Craig (Gait and Motion Analysis Lab, VA Hospital, Long Beach) and Brian Ruhe, Ph.D., CP (Assistant Professor, Orthotics and Prosthetics Program, CSU-Dominguez Hills)

Funding Source: California State University Program for Education and Research in Biotechnology (CSUPERB) Grant

Past Projects:

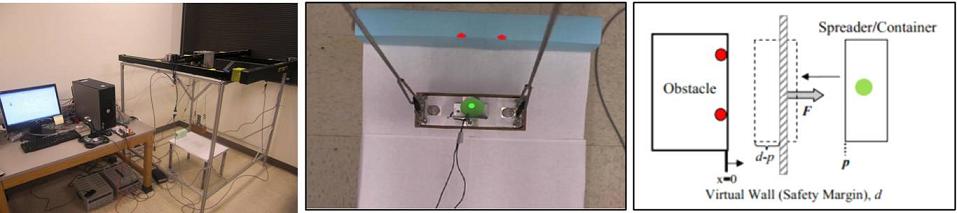

Feasibility Study of Haptic Guidance for Port Crane Operation

The project is a feasibility study on the integration of a multimodal (haptic, video, and audio feedback) user interface to assist the crane operator during container handling. This work focuses on the evaluation of sensor placement, the types of feedback (primarily haptic and video), and the design and construction of a scaled testbed. The guidance will be provided as an overlay of sensory information and commands, which the crane operator can override, to improve efficiency and safety during container loading and unloading. The work includes evaluating which feedback modalities most effectively communicate the computed guidance information to the operator, and developing algorithms and sensor integration to provide position and guidance information for the target container and its surroundings.

Students: Vinay Gangi (MS-EE), Alfred Monterrubio (MS-EE)

Collaborators: Dr. Henry Yeh (Department of Electrical Engineering, CSULB)

Funding Source: METRANS

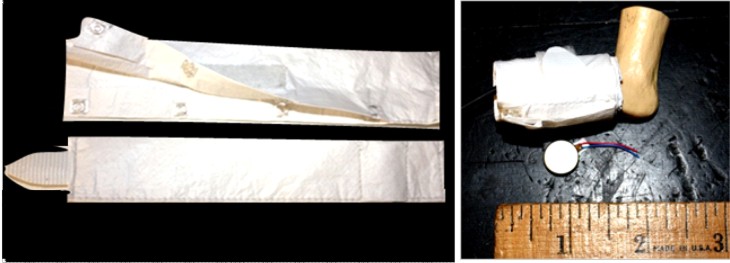

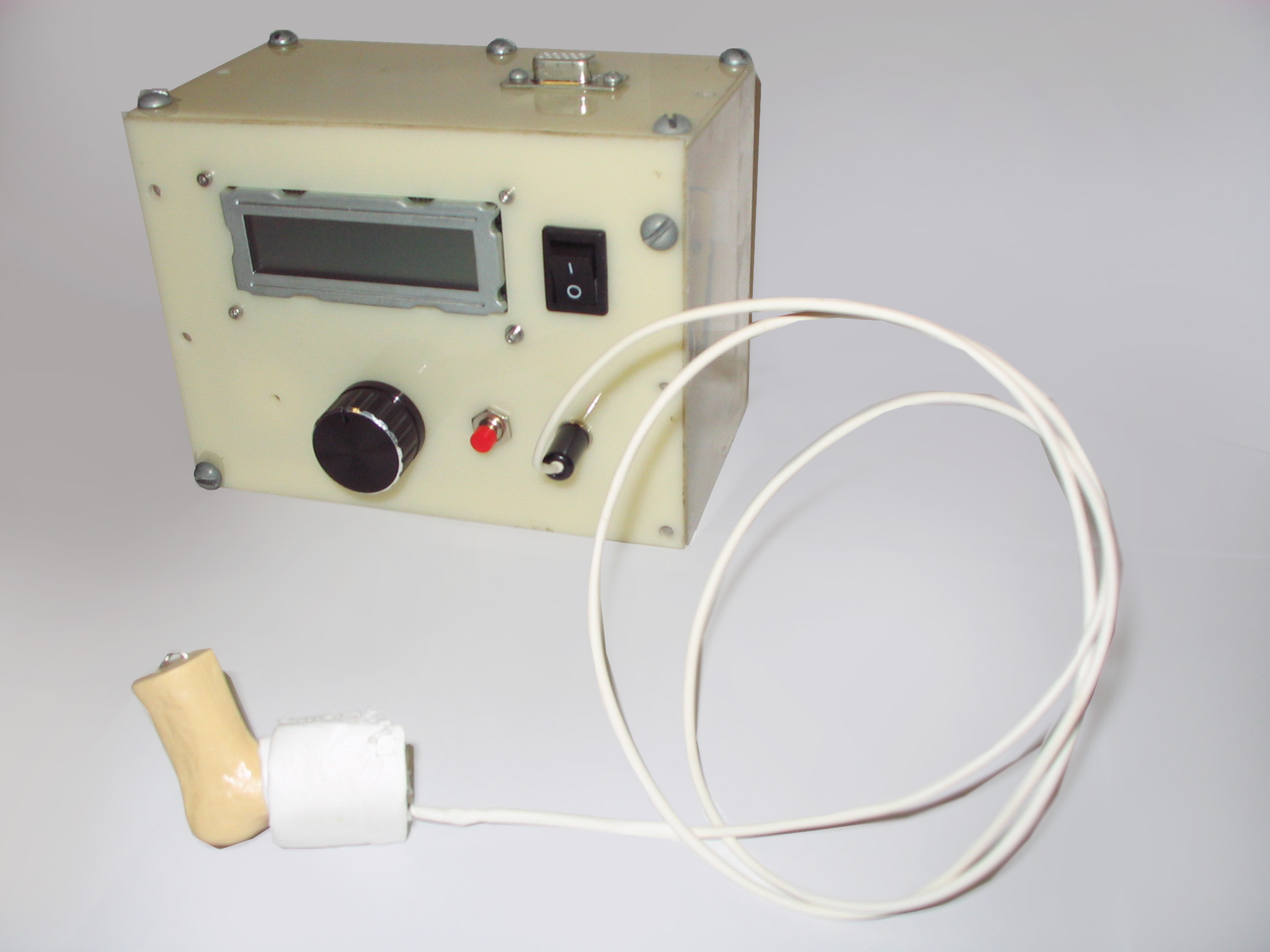

Closed-loop Vibrotactile Device for Treatment of Apnea in Premature Infants

Central apnea is a respiratory disorder commonly found in premature infants whose central nervous system is not fully developed. The condition causes a sudden stop in breathing which, if not treated in time, can lead to hypoxia and serious brain damage. An apnea episode is detected by observing the associated physiological responses, such as a prolonged drop in heart rate (BPM), blood oxygen level (SpO2), and chest impedance. Currently, there is no standard method of treating apnea; however, a common treatment is to wake the baby by rubbing sensitive areas, such as the back or the soles of the feet. Despite its effectiveness, manual stimulation requires the constant attention of clinical staff and can be inconsistent and unhygienic. Our system is developed to replace manual stimulation. The system automatically detects apnea and provides initial treatment through adjustable tactile stimulation delivered by the vibrotactile unit. Vital signs measured by a pulse oximeter, including heart rate and SpO2, are used to detect an apnea episode and control the activation and deactivation of the vibrotactile unit.
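The closed-loop idea described above can be sketched as a small threshold detector that switches the vibrotactile unit on after a sustained drop in the pulse-oximeter readings and off again once they recover. This is a minimal illustration only, not the lab's algorithm: the class name, the threshold values, and the three-sample hold are hypothetical placeholders and are not clinical values.

```python
from dataclasses import dataclass


@dataclass
class ApneaDetector:
    # All three values below are placeholders for illustration,
    # NOT clinically validated thresholds.
    hr_threshold_bpm: float = 100.0
    spo2_threshold_pct: float = 85.0
    hold_samples: int = 3  # consecutive low samples that count as "prolonged"
    _low_count: int = 0

    def update(self, heart_rate_bpm: float, spo2_pct: float) -> bool:
        """Process one pulse-oximeter sample.

        Returns True while the vibrotactile unit should be active.
        """
        low = (heart_rate_bpm < self.hr_threshold_bpm
               or spo2_pct < self.spo2_threshold_pct)
        # Count consecutive low samples; any normal sample resets the count,
        # which also deactivates the stimulation.
        self._low_count = self._low_count + 1 if low else 0
        return self._low_count >= self.hold_samples


if __name__ == "__main__":
    detector = ApneaDetector()
    samples = [(140, 97), (90, 96), (88, 90), (85, 84), (130, 95)]
    for hr, spo2 in samples:
        print(hr, spo2, detector.update(hr, spo2))
```

Requiring several consecutive low samples is one simple way to ignore momentary sensor dropouts; a real device would need clinically chosen limits and additional safeguards.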

Medical Collaborators: Dr. Maryam Moussavi (EE), Dr. Rowena Cayabyab (USC Women's and Children's Hospital) and Dr. Phuket Tantivit (Baptist Children's Hospital, Miami, Florida)

Educational Haptic Robot using MATLAB and Simulink

The project involves the development of an educational haptic (force-feedback) device using MATLAB/Simulink. The device, called the haptic paddle and first developed by Dr. Allison Okamura (Johns Hopkins University), allows users to physically interact with simulated mechanical systems. Forces from the virtual system are generated by a computer model, providing the user with real-time, bidirectional interaction at a low cost. The haptic paddle was further developed at Dr. Robert Webster's laboratory at Vanderbilt University for improved design and ease of integration with MATLAB and Simulink. The development at CSULB involves designing virtual simulations that cover more engineering topics at both the undergraduate and graduate levels. The system will be used to teach engineering students and will be evaluated for its teaching effectiveness.
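To make "forces from the virtual system are generated by a computer model" concrete, the sketch below renders a one-dimensional virtual wall as a spring-damper, a common introductory haptic simulation. It is an assumed example in Python, not the project's MATLAB/Simulink model; the function name, stiffness, and damping values are illustrative.

```python
def virtual_wall_force(position_m, velocity_m_s, wall_position_m=0.0,
                       stiffness_n_per_m=500.0, damping_n_s_per_m=2.0):
    """Force (N) to command on the handle for a wall occupying x > wall_position_m.

    Stiffness and damping are illustrative assumptions, not device parameters.
    """
    penetration = position_m - wall_position_m
    if penetration <= 0.0:
        return 0.0  # free space: the user feels nothing
    # Spring pushes the handle back out; damper resists motion inside the wall.
    force = -stiffness_n_per_m * penetration - damping_n_s_per_m * velocity_m_s
    # The wall may only push outward. Clamp so a fast exit never pulls the user in.
    return min(force, 0.0)


if __name__ == "__main__":
    for x, v in [(-0.01, 0.1), (0.01, 0.0), (0.01, 0.05), (0.001, -1.0)]:
        print(x, v, virtual_wall_force(x, v))
```

In a real haptic loop this function would be evaluated at a high, fixed rate using the measured handle position and an estimate of its velocity.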

Collaborators: Dr. Robert Webster (Department of Mechanical Engineering, Vanderbilt University)

Funding Source: MathWorks

Project website: Vanderbilt University (Main page), CSULB

Vision-based Robotic Guidance for Sealant Application

The project is in collaboration with Boeing engineers at the Center for Advanced Technology Support for Aerospace Industry (CATSAI) involving the development of a robotic workcell for automated sealant application.

Students: James Liu (ME, MS), Luis Caldron (BSME) and Martin Guirguis (BSEE)

Collaborators: Eric Whinnem, Angelica Davancens, and Branko Sarh (The Boeing Company), Profs. Bei Lu and Hamid Hefazi (CSULB)

Effect of Hand Dynamics on Virtual Fixtures for Compliant Human-Machine Interfaces


The goal of human-machine cooperative systems is to enhance user performance in tasks at the limits of human physical abilities. Cooperative systems combine the precision and the repeatability of a robot with the intelligence and experience of a human operator. Virtual fixtures are added to a cooperative system to guide the end-effector along desired paths in the workspace (guidance virtual fixtures) or to prevent the end-effector from entering undesired regions (forbidden-region virtual fixtures). In cooperative manipulation systems, the user and the robot simultaneously grasp the end-effector/tool. The human user applies a disturbance force to the robot in order to achieve motion. Despite the high rigidity and non-backdrivability of an admittance-controlled system, small joint and link compliance visibly degrade virtual fixture performance. The virtual fixture location becomes incorrectly defined. In addition to the deflection of the robot due to voluntary motion, the inherent mechanical parameters of the human hand also generate dynamics that result in undesired involuntary motion.

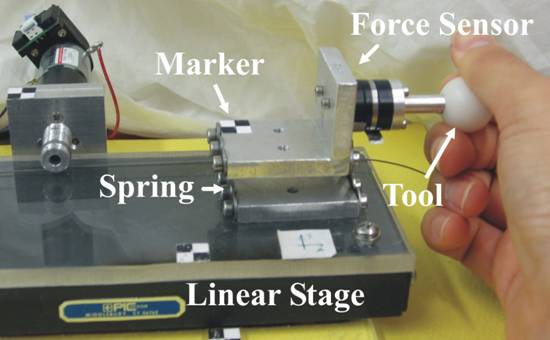

To investigate virtual fixture performance with hand dynamics, a 1-DOF admittance-controlled testbed was used. A spring element was added to simulate joint compliance. Two open-loop controllers were proposed to define a virtual fixture position that prevents the user from entering the true forbidden region: one that compensates for hand dynamics, and one that predicts overshoot based on the user's current velocity. The methods determine a 'safe' location of the virtual fixture that prevents the user from entering the true forbidden region. The virtual fixture can be viewed as a safety margin whereby its distance to the true forbidden region can vary depending on the dynamics of the system.
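The velocity-based idea can be illustrated with a short sketch: the fixture is placed ahead of the true forbidden boundary by a margin that grows with the user's approach speed, and a simple admittance law stops commanded motion into the fixture. This is a simplified stand-in and not the controllers developed in the project; the function names, the linear overshoot model, the `overshoot_time_s` constant, the minimum margin, and the admittance gain are all assumptions. The forbidden region is assumed to be x >= `forbidden_boundary_m`.

```python
def safe_fixture_position(forbidden_boundary_m, velocity_m_s,
                          overshoot_time_s=0.05, min_margin_m=0.001):
    """Place the virtual fixture ahead of the true forbidden boundary.

    Assumes overshoot grows linearly with approach speed (a simplification);
    overshoot_time_s and min_margin_m are hypothetical tuning values.
    """
    # Only motion toward the forbidden region (positive velocity) adds margin.
    predicted_overshoot_m = max(velocity_m_s, 0.0) * overshoot_time_s
    return forbidden_boundary_m - max(predicted_overshoot_m, min_margin_m)


def admittance_velocity(force_n, position_m, fixture_m, gain_m_per_n_s=0.01):
    """1-DOF admittance law: commanded velocity is proportional to applied force.

    Motion further into the fixture is blocked; motion away from it is allowed.
    """
    velocity = gain_m_per_n_s * force_n
    if position_m >= fixture_m and velocity > 0.0:
        return 0.0
    return velocity


if __name__ == "__main__":
    fixture = safe_fixture_position(0.10, velocity_m_s=0.2)
    print(fixture)
    print(admittance_velocity(5.0, 0.05, fixture))   # free motion
    print(admittance_velocity(5.0, fixture, fixture))  # blocked at the fixture
```

A faster approach moves the fixture further from the true boundary, which mirrors the description of the fixture as a safety margin whose size depends on the system dynamics.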