Abstract

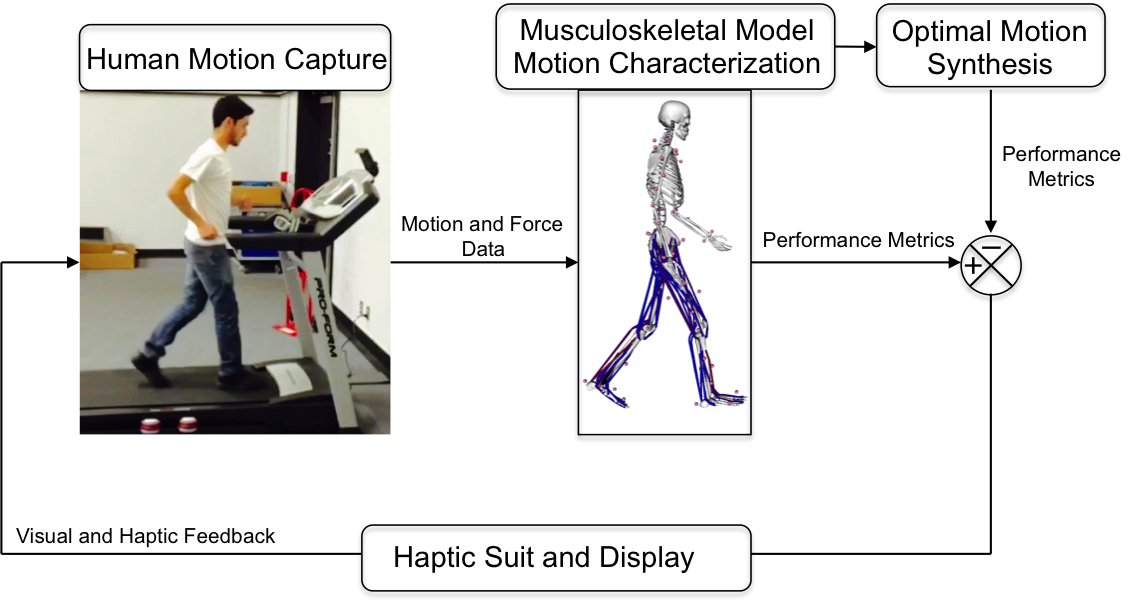

The goal of this research is to create a cyber-human framework that advances both robotics and biomechanics. It will do so by deepening our scientific understanding of how musculoskeletal physics and neural control govern human motor performance. This understanding will help clinicians quantify the characteristics of a subject's motion and design effective motion-training treatments. Current technologies do not permit detailed motion reconstruction in real time, which limits their use in clinical settings. This work will combine theory with software, hardware and sensing technology to synthesize human motion with dynamic, actively controlled, subject-specific musculoskeletal models and to provide real-time visual feedback to the human subject.

The project will deliver open-source algorithms and metrics for quantifying human performance and for understanding the underlying motion characteristics that influence these metrics. By enabling real-time human motion synthesis, the capabilities developed in this project could have a transformative impact on society, with potential applications in rehabilitation, physical therapy, human-robot interaction, kinesiology and occupational biomechanics. The new control framework and models, validated by motion capture experiments, will be disseminated to researchers through an online repository. Integrating the research with educational activities will give participating undergraduates and underrepresented students at a minority-serving institution new insights and tools for pursuing future engineering research.

Project outcomes will include: (1) computational models of the human musculoskeletal system for task-based control; (2) integrated performance metrics for motion characterization based on a subject's physiological constraints; and (3) control and simulation algorithms that synthesize movement using biomechanical models, accurately match experimental data, compensate for measurement errors, and visualize the model and its motion in real time. To these ends, task-based models of human motion will first be created. Motion capture experiments will then be conducted to validate the models and to fine-tune subject-specific parameters.

The resulting computational platform will then be used to determine long-term performance statistics and metrics that efficiently characterize human motion. In the second phase, robust control and simulation algorithms will be integrated into the computational platform to synthesize movement using the biomechanical models. The framework will then be used to identify feasible modifications that improve subject-specific motion characteristics. Finally, these criteria will be integrated into a feedback mechanism that visually suggests modified, optimal motion trajectories to the subject.